Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Last Stubsack for 2025 - may 2026 bring better tidings. Credit and/or blame to David Gerard for starting this.)

internet comment etiquette with erik just got off YT probation / timeout from when YouTube’s moderation AI flagged a decade old video for having russian parkour.

He celebrated by posting the below under a pipebomb video.

Hey, this is my son. Stop making fun of his school project. At least he worked hard on it. unlike all you little fucks using AI to write essays about books you don’t know how to read. So you can go use AI to get ahead in the workforce until your AI manager fires you for sexually harassing the AI secretary. And then your AI health insurance gets cut off so you die sick and alone in the arms of your AI fuck butler who then immediately cremates you and compresses your ashes into bricks to build more AI data centers. The only way anyone will ever know you existed will be the dozens of AI Studio Ghibli photos you’ve made of yourself in a vain attempt to be included. But all you’ve accomplished is making the price of my RAM go up for a year. You know, just because something is inevitable doesn’t mean it can’t be molded by insults and mockery. And if you depend on AI and its current state for things like moderation, well then fuck you. Also, hey, nice pipe bomb, bro.

@nfultz @BlueMonday1984 “I’d like to say, hey man nice shot. What a good shot, man!”

OT: Did you guys know they give cats mirtazapine as an appetite stimulant? (My guy is recovering from pneumonia and hasn’t been eating, so I’m really hoping this works).

Rooting for your kitty!

It’s working! I got him to eat!!!

Hell yeah 💪

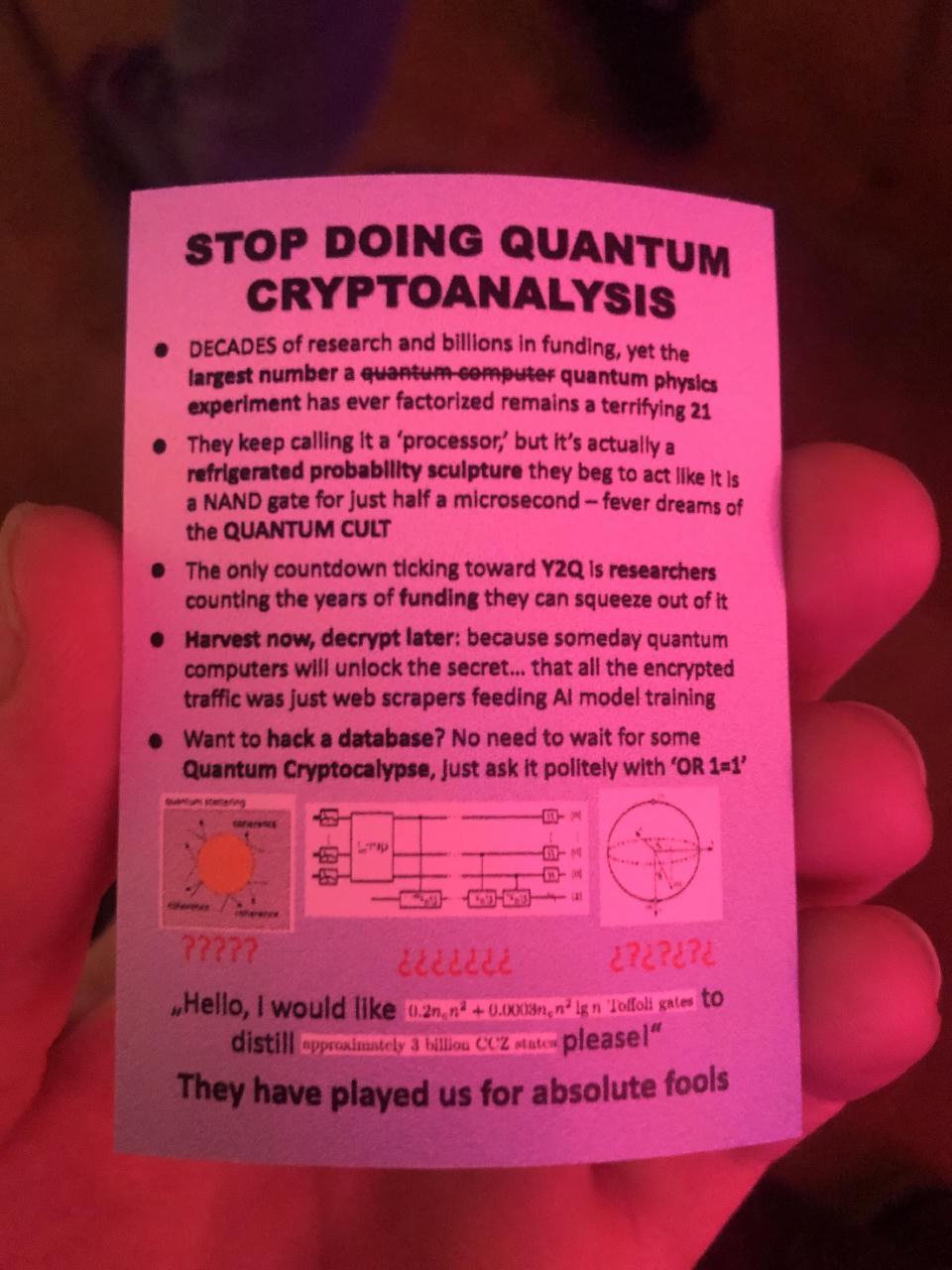

How about some quantum sneering instead of ai for a change?

They keep calling it a ‘processor,’ but it’s actually a refrigerated probability sculpture they beg to act like it Is a NAND gate for just half a microsecond

“Refrigerated probability sculpture” is outstanding.

Photo is from the recent CCC, but I can’t find where I found the image, sorry.

alt text

A photograph of a printed card bearing the text:

STOP DOING QUANTUM CRYPTOANALYSIS

- DECADES of research and billions in funding, yet the largest number a quantum computer quantum physics experiment has ever factorized remains a terrifying 21

- They keep calling it a ‘processor,’ but it’s actually a refrigerated probability sculpture they beg to act like it Is a NAND gate for just half a microsecond - fever dreams of the QUANTUM CULT

- The only countdown ticking toward Y2Q is researchers counting the years of funding they can squeeze out of it

- Harvest now, decrypt later: because someday quantum computers will unlock the secret… that all the encrypted traffic was just web scrapers feeding AI model training

- Want to hack a database? No need to wait for some Quantum Cryptocalypse, Just ask it politely with ‘OR 1=1’

(I can’t actually read the final bit, so I can’t describe it for you, apologies)

They have played us for absolute fools.

o7’s already posted it! https://awful.systems/post/6746032/9903043

Y2Q

I’m sorry, what does this stand for? Searching for it just results in usage without definition. I understand it’s referring to breaking conventional encryption, but it’s clearly an abbreviation of something, right? Years To Quantum? But then a countdown to it doesn’t make sense?

I think it’s a spin on Y2K. A hypothetical moment when quantum computing will break cryptography much like the year 2000 would have broken the datetime handling on some systems programmed with only the 20th century in mind.
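For anyone who hasn't seen what "programmed with only the 20th century in mind" looks like in practice, here is a minimal sketch. The function names are made up for illustration, and the "always add 1900" rule is an assumed stand-in for the many different ways legacy systems stored two-digit years.

```python
def parse_two_digit_year(yy: int) -> int:
    """Naive legacy rule (assumed for illustration): every two-digit year is 19xx."""
    return 1900 + yy


def years_between(start_yy: int, end_yy: int) -> int:
    """Elapsed years between two two-digit years under the naive rule."""
    return parse_two_digit_year(end_yy) - parse_two_digit_year(start_yy)


# An account opened in '85 and checked in '99 looks 14 years old...
age_1999 = years_between(85, 99)

# ...but checked in '00 (meaning 2000), the same account looks -85 years old.
age_2000 = years_between(85, 0)

print(age_1999, age_2000)
```

The arithmetic stays perfectly valid; the stored data simply no longer means what the code assumes it means.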

But… But Y2K = Year 2000, like it’s an actual sensible acronym. You can’t just fucking replace K with Q and call it a day what the fuck, did ChatGPT come up with this??

It’s a stupid fucking name alright. I guess it’s a bit like any old scandal being noungate despite the Watergate scandal being named after the building and having nothing to do with water.

I mean it’s so stupid that you had to explain to me that it’s based on Y2K because it makes no sense

noungate

Yes, this is why we need to resist stupid names before they enter mainstream or the world will continue to get dumber

guess the USA invasion of Venezuela puts a flashing neon crosshair on Taiwan.

An extremely ridiculous notion that I am forced to consider right now is that it matters whether the CCP invades before or after the “AI” bubble bursts. Because the “AI” bubble is the biggest misallocation of capital in history, which means people like the MAGA government are desperate to wring some water out of those stones, anything. And for various economical reasons it isn’t doable at the moment to produce chips anywhere else than Taiwan. No chips, no “AI” datacenters, and they promised a lot of AI datacenters—in fact most of the US GDP “growth” in 2025 was promises of AI datacenters, if you don’t count these promises the country is already in recession.

Basically I think if the CCP invades before the AI bubble pops, MAGA would escalate to full-blown war against China to nab Taiwan as a protectorate. And if we all die in nuclear fallout caused to protect chatbot profits I will be so over this whole thing

@mirrorwitch @BlueMonday1984 nah, quite a few people will stay alive to continue to be this miserable experience where certain people have to clean up after such fuck ups.

Said that, AI bubble burst will be glorious. Shit scary, but glorious.

@mirrorwitch I note that China is on the verge of producing their own EUV lithography tech (they demo’d it a couple of months back) so TSMC’s near-monopoly is on the edge of disintegrating, which means time’s up for Taiwan (unless they have some strategic nukes stashed in the basement).

If China *already* has EUV lithography machines they could plausibly reveal a front-rank semiconductor fab-line—then demand conditional surrender on terms similar to Hong Kong.

Would Trump follow through then?

@cstross @mirrorwitch Having the fab is worthless. (Nearly. They’re expensive to build.) The irreplaceable thing is the specific people and the community of practice. (Same as with a TCP/IP stack that works in the wild, or bind; this is really hard to do and the accumulated knowledge involved in getting where it is now is a full career thing to acquire and brains are rate-limited.)

China most probably doesn’t have that yet.

That is, however, not in any way the point. Unification is an axiom.

@graydon @cstross @mirrorwitch I’ve had to be an expert in this stuff for decades. Which has imparted a particular bit of knowledge.

That being: CHINA FUCKING LIES ALL THE TIME. Just straight up bald-faced lying because they must be *perceived* as super-advanced.

Even stealing as much IP as they possibly can, China is many years from anything competitive. Their most advanced is CXMT, which was 19nm in '19, and had to use cheats and espionage to get to 10nm-class.

@graydon @cstross @mirrorwitch are they on the verge of their own EUV equipment? Not even remotely close. It took ASML billions and decades. And their industries are built on IP theft. That’s not jingoism; that’s first-hand experience. Just as taking shortcuts and screwing foreigners is celebrated.

I’ve sampled CXMT’s 10G1 parts. They’re not competitive. They claim 80% yield (very low) at 50k WPM. Seems about right, as 80% of the DIMMs actually passed validation.

@graydon @cstross @mirrorwitch so yes, that very much creates a disincentive to bomb their perceived enemies out of existence. For all the talk, they are fully aware of the state of things and that they are not domestically capable of getting anywhere near TSMC.

At the same time though, they are also monopolists. They engage in dumping to drive competitors out of business. So forcing the world to buy sub-standard parts from them is a good thing.

So it comes down to Winnie the Pooh’s mood.

@cstross @mirrorwitch In a bunch of ways, the unspeakable 19th and 20th centuries of Chinese history are constructed as the consequences of powerlessness; the point is to do a magic to abolish all traces of powerlessness.

Retaking control of Taiwan is not a question and cannot be a question. Policy toward Taiwan is not what Hong Kong got, they’re going to get what the Uyghur are getting. (The official stance on democracy is roughly the medieval Church’s stance on heresy.)

the medieval Church’s stance on heresy

I’m not an expert on this, but wasn’t this period not that bad and it was more the early modern period where the trouble really started? (Esp the witch hunts, and also the organized church was actually not as bad re the witch hunts, the Spanish inquisition didn’t consider confessions gotten via torture valid for example, and it was an early modern thing). The medieval period tends to get a bad rap.

@Soyweiser You’ve forgotten the Crusades, right? Right? Or the Clifford’s Tower Massacre (to get hyper-specific in English history) and similar events all over Europe? Or the Reconquista and the Alhambra Decree?

The crusades/Reconquista were more an externally aimed thing at the Muslims right? (at least in intent from the organized church side, in practice not so much, so I’m not talking about those rampages). So yeah I was specifically talking about heresies, and I’m also very much not an expert in these things, so I don’t know. I have not forgotten about the Clifford’s Tower/Alhambra things, as I don’t know about them (I will look them up when I’m not phone posting). I was thinking more about stuff like protestantism, witch hunts and Jan Hus (the latter does count, as it is from the late medieval period iirc).

I just don’t know very much about the period, but did know some wiccan types who had wild ahistorical stories about the witch hunts.

E: yeah, I don’t think we should put anti-semitism under anti-heresy stuff, it being its own religion and all that. But as Graydon mentioned, the Albigensian Crusade fully counts for all my weird hangups and so I was totally wrong.

@Soyweiser @techtakes Nope. The Albigensian Crusade rampaged through the Languedoc (southern France, as it is now) and genocided the Cathars. Numerous lesser organized pogroms massacred Jews al fresco and butchered Muslims and Pagans living under Christian rule. The Alhambra Decree outlawed Islam and Judaism in Spain and set up a Holy Inquisition to persecute them: Edward I expelled all the Jews from England (he owed some of them money): and so on.

@Soyweiser https://en.wikipedia.org/wiki/Albigensian_Crusade

Try finding some Cathar writings.

While I think it’s entirely fair to say that the medieval period gets a bad rap in terms of equating feudalism to the later god-king aristocracies, it’s not in any way unfair to say the medieval church reacted to heresy with violence. (Generally effective and overwhelming violence; if you’re claiming sole moral authority you can’t really tolerate anyone questioning your position.)

Thanks, yeah, as I also said to Stross, I don’t know that much about the period. Most of it comes from Crusader Kings ;), and the religion mechanics there are not that historically accurate, so I don’t put much stock in that apart from ‘some people believed in a religion named like this once’.

Anyway thanks both for correcting me and giving me homework (I’ll read up on it, any more specifics about the Cathar stuff would be appreciated, as I wouldn’t know where to start).

@Soyweiser The Cathars are the heretics being extirpated during the Albigensian Crusade.

I wouldn’t say having the fab is worthless, but more that saying you have built one and it actually producing as specced, at scale, and not producing rubbish is hard. From what I got talking to somebody who knew a little bit more than me who had had contact with ASML these fabs take ages to construct properly and that is also quite hard. Question will be how far they are on all this, a tech demo can be quite far off from that. They have been at it for a while now however.

Wonder if the fight between Nexperia (e: called it nxp here first by accident apologies) and China also means they are further along on this path or not. Or if it is relevant at all.

Small brain: this ai stuff isn’t going away, maybe I should invest in openAI and make a little profit along the way

Medium brain: this ai stuff isn’t going away, maybe I should invest in power companies as producing and selling electricity is going to be really profitable

Big brain: this ai stuff isn’t going away, maybe I should invest in defense contractors that’ll outfit the US’s invasion of Taiwan…

invest

If you are broadly invested in US stocks, you are already invested in the chatbot bubble and the defense industry. If you are worried about that, an easy solution is to move some of that money elsewhere.

Big brain: this ai stuff isn’t going away, maybe I should invest in defense contractors that’ll outfit the US’s invasion of Taiwan…

Considering Recent Events™, anyone outfitting America’s gonna be making plenty off a war in Venezuela before the year ends.

" And if we all die in nuclear fallout caused to protect chatbot profits I will be so over this whole thing"

That’s a great line.

And they don’t even turn a profit.

You can’t take TSMC by force. Any fighting there would trash the fabs, and anyway you need imported equipment to keep it running. So if China did invade Taiwan and wreck it, there’d be little point in trying to take it back.

A journalist attempts to ask the question “Why Do Americans Hate A.I.?”, and shows their inability to tell actually useful tech from lying machines:

Bonus points for gaslighting the public on billionaires’ behalf:

These worries are real. But in many cases, they’re about changes that haven’t come yet.

Of all the statements that he could have made, this is one of the least self-aware. It is always the pro-AI shills who constantly talk about how AI is going to be amazing and have all these wonderful benefits next year (curve go up). I will also count the doomers who are useful idiots for the AI companies.

The critics are the ones who look at what AI is actually doing. The informed critics look at the unreliability of AI for any useful purpose, the psychological harm it has caused to many people, the absurd amount of resources being dumped into it, the flimsy financial house of cards supporting it, and at the root of it all, the delusions of the people who desperately want it to all work out so they can be even richer. But even people who aren’t especially informed can see all the slop being shoved down their throats while not seeing any of the supposed magical benefits. Why wouldn’t they fear and loathe AI?

These worries are real. But in many cases, they’re about changes that haven’t come yet.

famously, changes that have already happened and become entrenched are easier to reverse than they would have been to just prevent in the first place. What an insane justification

A few weeks ago, David Gerard found this blog post with a LessWrong post from 2024 where a staffer frets that:

Open Phil generally seems to be avoiding funding anything that might have unacceptable reputational costs for Dustin Moskovitz. Importantly, Open Phil cannot make grants through Good Ventures to projects involved in almost any amount of “rationality community building”

So keep whistleblowing and sneering, it’s working.

Sailor Sega Saturn found a deleted post on https://forum.effectivealtruism.org/users/dustin-moskovitz-1 where Moskovitz says that he has moral concerns with the Effective Altruism / Rationalist movement not reputation concerns (he is a billionaire executive so don’t get your hopes up)

All of the bits I quoted in my other comment were captured by archive.org FWIW: a, b, c. They can also all still be found as EA forum comments via websearch, but under [anonymous] instead of a username.

This newer archive also captures two comments written since then. Notably there’s a DOGE mention:

But I can’t e.g. get SBF to not do podcasts nor stop the EA (or two?) that seem to have joined DOGE and started laying waste to USAID. (On Bsky, they blame EAs for the whole endeavor)

The February 2024 Medium post by Moskovitz objects to cognitive decoupling as an excuse to explore eugenics and says that Eliezer Yudkowsky seems unreasonably confident in imminent AI doom. It also notes that Utilitarianism can lead ugly places such as longtermism and Derek Parfit’s repugnant conclusion. In the comments he mentions no longer being convinced that it’s as useful to spend on insect welfare as on “chicken, cow, or pig welfare.” He quotes Julia Galef several times. A choice quote from his comments on forum.effectivealtruism.org:

If the (Effective Altruism?) brand wasn’t so toxic, maybe you wouldn’t have just one foundation like us to negotiate with, after 20 years?

Does anyone have an explainer on the supposed DOGE/EA connection? All I can find is this dude with a blog wobbling back and forth with LessWrong flavoured language https://www.statecraft.pub/p/50-thoughts-on-doge (he quotes Venkatesh Rao and Dwarkesh Patel who are part of the LessWrong Expanded Universe).

The bluesky reference may be about this thread & this thread.

One of the replies names Cole Killian as an EA involved with DOGE. The image is dead but has alt text.

I mean there’s at least one. You could “no-true-scotsman” him, but between completing an EA fellowship and going vegan, he seems to fit a type. [A vertical screenshot of an archive.org snapshot of Cole Killian’s website, stating accomplishments. Included in the list are “completed the McGill effective altruism fellowship” and “went vegan and improved cooking skills”]

Two of the bsky posts are log-in only. Huh, Killian is in to Decentralized Autonomous Organizations (blockchain), high-frequency trading (like our friends at Jane Street), veganism, and Effective Altruism?

Two of the bsky posts are log-in only

if you’re going to do internet research, at this point it’s a skill issue. Create a reader account.

I could one day but nitter and the Wayback Machine and public tools have gotten me this far!

There’s also skyview.social, which I personally use since I am not interested in signing up.

Here’s another interesting quote from the now deleted webpage archive: https://old.reddit.com/r/mcgill/comments/1igep4h/comment/masajbg/

My name is Cole. Here’s some quick info. Memetics adjacence:

Previously - utilitarianism, effective altruism, rationalism, closed individualism

Recently - absurdism, pyrrhonian skepticism, meta rationalism, empty individualism

Sounds like a typical young male seeker (with a bit of épater les bourgeois). Not the classic Red Guard personality but it served Melon Husk’s needs.

I was unable to follow the thread of conversation from the archived links, so here is the source in case anyone cares.

Does anyone know when Dustin deleted his EA forums account? Did he provide any additional explanation for it?

Rich Hickey joins the list of people annoyed by the recent Xmas AI mass spam campaign: https://gist.github.com/richhickey/ea94e3741ff0a4e3af55b9fe6287887f

LOL @ promptfondlers in comments

aww those got turned off by the time I got to look :(

It’s a treasure trove of hilariously bad takes.

There’s nothing intrinsically valuable about art requiring a lot of work to be produced. It’s better that we can do it with a prompt now in 5 seconds

Now I need some eye bleach. I can’t tell anymore if they are trolling or their brains are fully rotten.

these fucking people: “art is when picture matches words in little card next to picture”

Don’t forget the other comment saying that if you hate AI, you’re just “vice-signalling” and “telegraphing your incuruosity (sic) far and wide”. AI is just like computer graphics in the 1960s, apparently. We’re still in early days guys, we’ve only invested trillions of dollars into this and stolen the collective works of everyone on the internet, and we don’t have any better ideas than throwing more ~~money~~ compute at the problem! The scaling is still working guys, look at these benchmarks that we totally didn’t pay for. Look at these models doing mathematical reasoning. Actually don’t look at those, you can’t see them because they’re proprietary and live in Canada.

In other news, I drew a chart the other day, and I can confidently predict that my newborn baby is on track to weigh 10 trillion pounds by age 10.

Neom update:

Description:

A Lego set on the clearance shelf. It’s an offroad truck that has Neom badges on it.

So Neom is one of those zany planned city ideas right?

Why… why do they need a racing team? Why does the racing team need a lego set? Who is buying it for 27 dollars? (Well apparently the answer to that last question is nobody).

Anyway a random thought I had about these sorts of silly city projects. Their website says:

NEOM is the building the foundations for a new future - unconstrained by legacy city infrastructure, powered by renewable energy and prioritizing the conservation of nature. We are committed to developing the region to the highest standards of sustainability and livability.

(emphasis mine)

This is a weird worldview. The idea that you can sweep existing problems under the rug and start new with a blank slate.

No pollution (but don’t ask about how Saudi Arabia makes money), no existing costly “legacy” infrastructure to maintain (but don’t ask about how those other cities are getting along), no undesirables (but don’t worry they’re “complying with international standards for resettlement practices”*).

They assume there’s some external means of supplying money, day workers, solar panels, fuel, food, etc. As long as their potemkin village is “sustainable” and “diverse” on the first order they don’t have to think about that. Out of sight, out of mind. Pretty similar to the libertarian citadel fever dreams in a way.

* Actual quote from their website eurrgh, which even itself looks like a lie

“Why… why do they need a racing team? Why does the racing team need a lego set? Who is buying it for 27 dollars? (Well apparently the answer to that last question is nobody).”

Apparently NEOM is sponsoring some McLaren Formula E teams. (Formula E being electric). Google Pixel, Tumi luggage, and the UK Ministry of Defence are other sponsors, but NEOM seems to be the major sponsor.

I assume the market for these is not so much NEOM fans but rather McLaren fans.

As to why NEOM is sponsoring it, I think it’s a bit of Saudi boosterism or techwashing to help MBS move past the whole bone saw thing.

NEOM is the building the foundations for a new future - unconstrained by legacy city infrastructure, powered by renewable energy and prioritizing the conservation of nature. We are committed to developing the region to the highest standards of sustainability and livability.

lol, this is saudi, they found a way to make half of their water supply to riyadh nonrenewable

The best way of conserving nature is to build a ginormous wall 110 miles long and 1,600 feet high that utterly destroys wildlife’s ability to traverse territory it has been traversing for eons. It is known.

I hear ya!

I guess Neom is what happens when a billionaire in the desert gets infected by the seasteading brainworms.

Dubai famously doesn’t have a sewage pipe system, human waste is loaded onto trucks that spend hours waiting to offload it in the only sewage treatment plant available.

Dubai is in the United Arab Emirates, not Saudi Arabia.

I’d wager an ounce of gold that the general attitude towards sustainability and the environment is 100% aligned among the rulers of both states.

The Soylent meal replacement thing apparently forgot some important micro nutrients.

NEOM is a laundry for money, religion, genocidal displacement, and the Saudi reputation among Muslims. NEOM is meant to replace Wahhabism, the Saudi family’s uniquely violent fundamentalism, with a much more watered-down secularist vision of the House of Saud where the monarchs are generous with money, kind to women, and righteously uphold their obligations as keepers of Mecca. NEOM is not only The Line, the mirrored city; it is multiple different projects, each set up with the Potemkin-village pattern to assure investors that the money is not being misspent. In each project, the House of Saud has targeted various nomads and minority tribes, displacing indigenous peoples who are inconvenient for the Saudi ethnostate, with the excuse that those tribes are squatting on holy land which NEOM’s shrines will further glorify.

They want you to look at the smoke and mirrors in the desert because otherwise you might see the blood of refugees and the bones of the indigenous. A racing team is one of the cheaper distractions.

aiui they also really don’t like eyes on the modern slave labour they’re using to build it all

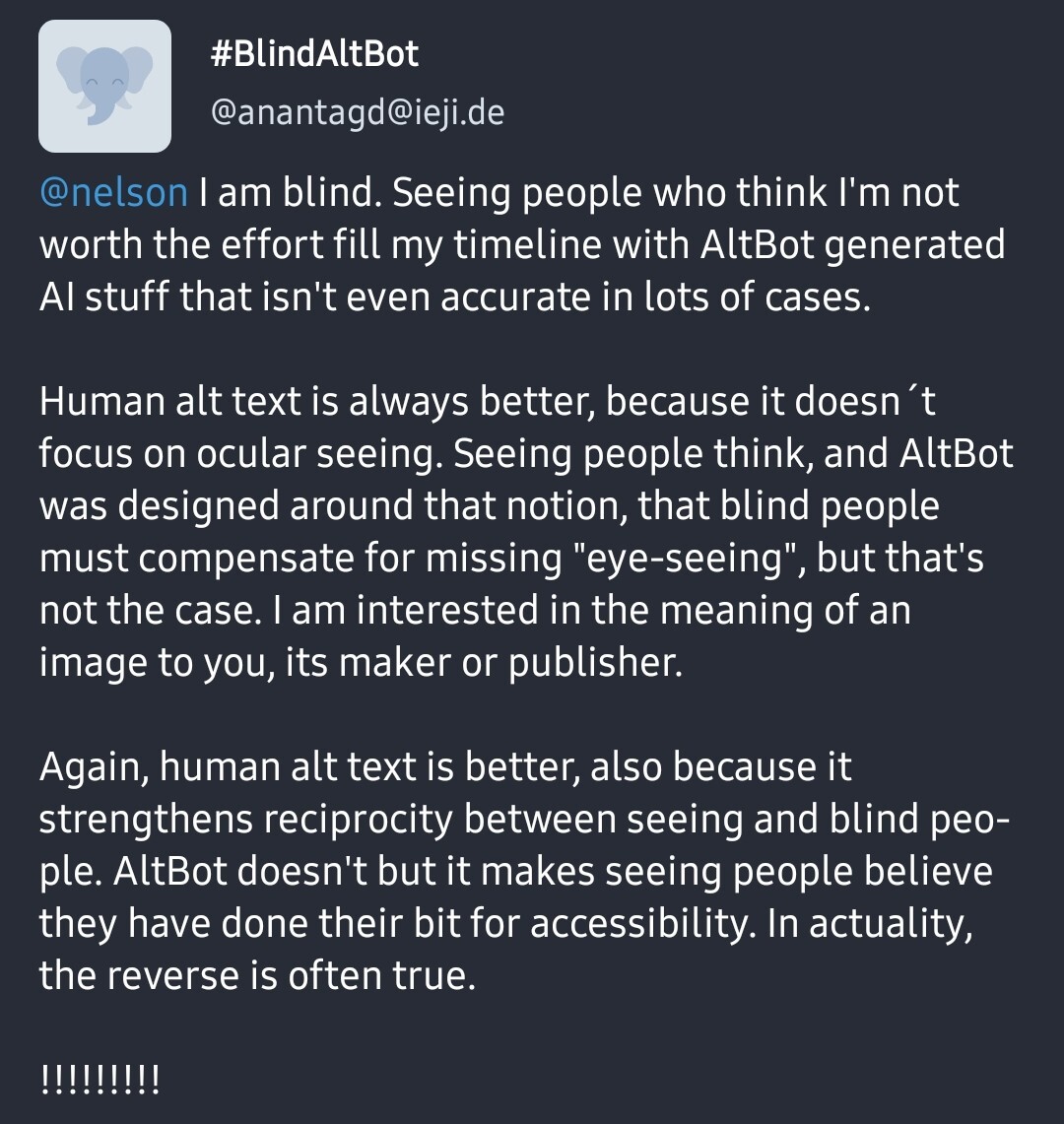

The developer of an LLM image description service for the fediverse has (temporarily?) turned it off due to concerns from a blind person.

Link to the thread in question

Good for them

Good for them. Not quite abandoning the project and deleting it, but it’s a good move from them nonetheless.

https://github.com/leanprover/lean4/blob/master/.claude/CLAUDE.md

Imagine if you had to tell people “now remember to actually look at the code before changing it.” – but I’m sure LLMs will replace us any day now.

Also lol this sounds frustrating:

Update prompting when the user is frustrated: If the user expresses frustration with you, stop and ask them to help update this .claude/CLAUDE.md file with missing guidance.

Edit: I might be misreading this but is this a sign of someone working on an LLM driven release process? https://github.com/leanprover/lean4/blob/master/.claude/commands/release.md ??

Important Notes: NEVER merge PRs autonomously - always wait for the user to merge PRs themselves

Yes, they are trying to automate releases.

sidenote: I don’t like how taking an approach of mediocre software engineering to mathematics is becoming more popular. Update your dependency (whose code you never read) to v0.4.5 for bug fixes! Why was it incorrect in the first place? Anyway, this blog post sets some good rules for reviewing computer proofs. The second-to-last comment tries to argue npm-ification is good actually. I can’t tell if satire

I don’t like how taking an approach of mediocre software engineering to mathematics is becoming more popular

would you be willing to elaborate on this? i am just curious because i took the opposite approach (started as a mathematician now i write bad python scripts)

The flipside to that quote is that computer programs are useful tools for mathematicians. See the mersenne prime search, OEIS and its search engine, The L-function database, as well as the various python scripts and agda, rocq, lean proofs written to solve specific problems within papers. However, not everything is perfect: throwing more compute at the problem is a bad solution in general; the stereotypical python script hacked together to serve only a purpose has one-letter variable names and redundant expressions, making it hard to review. Throw in the vibe coding over it all, and that’s pretty much the extent of what I mean.

I apologize if anything is confusing, I’m not great at communication. I also have yet to apply to a mathematics uni, so maybe this is all manageable in practice.

One important nuance is that there are, broadly speaking, two ways to express a formal proof: it can either be fairly small but take exponential time to verify, or it can be fairly quick to verify but exponentially large. Most folks prefer to use the former sort of system. However, with extension by definitions, we can have a polynomial number of polynomially-large definitions while still verifying quickly. This leads to my favorite proof system, Metamath, whose implementations measure their verification speed in kiloproofs/second. If you give me a Metamath database then I can quickly confirm any statement in a few moments with multiple programs and there is programmatic support for looking up the axioms associated with any statement; I can throw more compute at the problem. While LLMs do know how to generate valid-looking Metamath in context, it’s safe to try to verify their proofs because Metamath’s kernel is literally one (1) string-handling rule.
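To give a feel for what a "string-handling rule" means here, below is a toy sketch of simultaneous substitution over token strings. It is not a Metamath verifier: there is no hypothesis matching, no disjoint-variable checking, and no proof stack. The helper name `substitute` and the example tokens are assumptions made up for illustration.

```python
def substitute(tokens, mapping):
    """Replace each variable token with its assigned token list, all at once.

    Tokens that are not keys in `mapping` (constants like "(" or "->")
    are copied through unchanged.
    """
    out = []
    for tok in tokens:
        out.extend(mapping.get(tok, [tok]))
    return out


# A schema with two variables, "ph" and "ps" (hypothetical example).
schema = "|- ( ph -> ps )".split()

# Assign a token string to each variable.
mapping = {"ph": "( a = b )".split(), "ps": ["ch"]}

result = " ".join(substitute(schema, mapping))
print(result)
```

A real checker applies this kind of substitution to an axiom or an earlier theorem and then compares the resulting strings with what the proof step claims, which is why the trusted core can stay so small.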

This is all to reconfirm your impression that e.g. Lean inherits a “mediocre software engineering” approach. Junk theorems in Lean are laughably bad due to type coercions. The wider world of HOL is more concerned with piles of lambda calculus than with writing math proofs. Lean as a general-purpose language with I/O means that it is no longer safe to verify untrusted proofs, which makes proof-carrying Lean programs unsafe in practice.

@Seminar2250@awful.systems you might get a laugh out of this too. FWIW I went in the other direction: I started out as a musician who learned to code for dayjob and now I’m a logician.

Thank you for the links

Junk theorems in Lean are laughably bad due to type coercions.

Those look suspicious… I mean when you consider that the set of propositions is given a topology and an order, “The set `{z : ℝ | z ≠ 0}` is a continuous, non-monotone surjection.” doesn’t seem so ridiculous after all. Similarly the determinant of logical operations gains meaning on a boolean algebra. Zeta(1) is also by design. It does start getting juicy around “2 - 3 = +∞” and the nontransitive equality and the integer interval.

Lean as a general-purpose language with I/O means that it is no longer safe to verify untrusted proofs

This is darkly funny.
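For readers who haven't met the "by design" flavour of junk value, here is a small sketch in core Lean 4 (no Mathlib assumed). These are not the theorems from the linked list, just the two best-known instances of the same convention: operations are made total by returning a default instead of failing.

```lean
-- Natural-number subtraction truncates at zero instead of failing.
example : (2 : Nat) - 3 = 0 := by decide

-- Division by zero is defined, and returns zero.
example : (1 : Nat) / 0 = 0 := by decide
```

Both statements are provable, so anything built on top of them is formally fine, even though neither matches what a mathematician would write on paper.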

no need to apologize, i understand what you mean. my experience with mathematicians has been that this is really common. even the theoretical computer scientists (the “lemma, theorem, proof” kind) i have met do this kind of bullshit when they finally decide to write a line of code. hell, their pseudocode is often baffling — if you are literally unable to run the code through a machine, maybe focus on how it comes across to a human reader? nah, it’s more important that i believe it is technically correct and that no one else is able to verify it.

So many CRITICAL and MANDATORY steps in the release instruction file. As it always is with AI, if it doesn’t work, just use more forceful language and capital letters. One more CRITICAL bullet point bro, that’ll fix everything.

Sadly, I am not too surprised by the developers of Lean turning towards AI. The AI people have been quite interested in Lean for a while now since for them, it is a useful tool to have AIs do math (and math = smart, you know).

The whole culture of writing “system prompts” seems utterly a cargo-cult to me. Like if the ST: Voyager episode “Tuvix” was instead about Lt. Barclay and Picard accidentally getting combined in the transporter, and the resulting sadboy Barcard spent the rest of his existence neurotically shouting his intricately detailed demands at the holodeck in an authoritative British tone.

If inference is all about taking derivatives in a vector space, surely there should be some marginally more deterministic method for constraining those vectors that could be readily proceduralized, instead of apparent subject-matter experts being reduced to wheedling with an imaginary friend. But I have been repeatedly assured by sane, sober experts that it simply is not so.

When I first learned that you could program a chatbot merely by giving instructions in English sentences as if it was a human being, I admit I was impressed. I’m a linguist, natural language processing is really hard. There was a certain crossing of boundaries in the idea of telling it something at chatbot level, e.g. “and you will never delete files outside this directory”, and this “system prompt” actually shaping the behaviour of the chatbot. I don’t have much interest in programming anymore but I wondered how this crossing of levels was implemented.

The answer of course is that it’s not. Programming a chatbot by talking to it doesn’t actually work.

I don’t have any good lay literature, but get ready for “steering vectors” this year. It seems like two or three different research groups (depending on whether I count as a research group) independently discovered them over the past two years and they are very effective at guardrailing because they can e.g. make slurs unutterable without compromising reasoning. If you’re willing to read whitepapers, try Dunefsky & Cohan, 2024 which builds that example into a complete workflow or Konen et al, 2024 which considers steering as an instance of style transfer.

I do wonder, in the engineering-disaster-podcast sense, exactly what went wrong at OpenAI because they aren’t part of this line of research. HuggingFace is up-to-date on the state of the art; they have a GH repo and a video tutorial on how to steer LLaMA. Meanwhile, if you’ll let me be Bayesian for a moment, my current estimate is that OpenAI will not add steering vectors to their products this year; they’re already doing something like it internally, but the customer-facing version will not be ready until 2027. They just aren’t keeping up with research!
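For anyone who hasn't met the term: as usually described, a steering vector is a direction in the model's hidden-state space that gets scaled and added to the activations at some layer during inference. Here is a toy sketch of just that arithmetic, in plain Python with made-up numbers. It is not taken from the cited papers or the HuggingFace material, and the names steer, direction and strength are illustrative only; a real implementation would hook into an actual model's layers.

```python
def steer(hidden, direction, strength):
    """Add a scaled steering direction to one hidden-state vector."""
    return [h + strength * d for h, d in zip(hidden, direction)]


def dot(a, b):
    """Projection of a onto b, assuming b has unit length."""
    return sum(x * y for x, y in zip(a, b))


hidden = [0.5, -1.0, 2.0]      # made-up activation vector
direction = [0.0, 1.0, 0.0]    # made-up unit-length "feature" direction

# A negative strength pushes the activation away from the feature.
steered = steer(hidden, direction, strength=-3.0)

print(dot(hidden, direction), dot(steered, direction))
```

The only point of the sketch is that the intervention is ordinary vector arithmetic on activations rather than more text in the prompt.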

Great. Now we’ll need to preserve low-background-radiation computer-verified proofs.

It reminds me of the bizarre and ill-omened rituals my ancestors used to start a weed eater.

One of my old teachers would send documents to the class with various pieces of information. They were a few years away from retirement and never really got word processors. They would start by putting important stuff in bold. But some important things were more important than others. They got put in bold all caps. Sometimes, information was so critical it got put in bold, underline, all caps and red font colour. At the time we made fun of the teacher, but I don’t think I could blame them. They were doing the best they could with the knowledge of the tools they had at the time.

Now, in the files linked above I saw the word “never” in all caps, bold all caps, in italics and in a normal font. Apparently, one step in the process is mandatory. Are the others optional? This is supposed to be a procedure to be followed to the letter with each step being there for a reason. These are supposedly computer-savvy people.

CRITICAL RULE: You can ONLY run release_steps.py for a repository if release_checklist.py explicitly says to do so […] The checklist output will say “Run script/release_steps.py {version} {repo_name} to create it”

I’ll admit I did not read the scripts in detail but this is a solved problem. The solution is a script with structured output as part of a pipeline. Why give up one of the only good things computers can do: executing a well-defined task in a deterministic way? Reading this is so exhausting…
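To make "structured output as part of a pipeline" concrete, here is a minimal sketch under loud assumptions: the checklist step emits JSON and the next stage reads it, so nobody (human or LLM) has to parse English. Only the script/release_steps.py path comes from the quoted instructions; the function names, the JSON fields and the repo data are invented for illustration, and this is not how the actual Lean scripts are written.

```python
import json


def release_checklist(version, repos):
    """Hypothetical checklist step: one machine-readable record per repo.

    `repos` maps repo name -> set of versions already released (stand-in data).
    """
    return [
        {"repo": name, "version": version, "run_release_steps": version not in done}
        for name, done in sorted(repos.items())
    ]


def plan_release_steps(checklist_json):
    """Read the checklist's JSON and return only the commands it authorises."""
    commands = []
    for item in json.loads(checklist_json):
        if item["run_release_steps"]:
            commands.append(["script/release_steps.py", item["version"], item["repo"]])
    return commands


# Made-up state: "docs" already has v1.1.0, "core" does not.
repos = {"core": {"v1.0.0"}, "docs": {"v1.0.0", "v1.1.0"}}
checklist_json = json.dumps(release_checklist("v1.1.0", repos))
planned = plan_release_steps(checklist_json)
print(planned)
```

The "ONLY run it if the checklist says so" rule becomes an if statement instead of a capitalised plea.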

A lot of the money behind lean is from microsoft, so a push for more llm integration is depressing but unsurprising.

Turns out though that llms might actually be ok for generating some kinds of mathematical proofs so long as you’ve formally specified the problem and have a mechanical way to verify the solution (which is where lean comes in). I don’t think any other problem domain that llms have been used in is like that, so successes here can’t be applied elsewhere. I also suspect that a much, uh, leaner specialist model would do just as good a job there. As always, llms are overkill that can only be used when someone else is subsidising them.

Lol “important notes” is this the new “I’m a sign, not a cop”?

If you have to use an LLM for this (which no one needs to), at least run it as an unprivileged user that spits out commands that you then need to approve, not with full access to drop the production database and a “pretty please don’t”.
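A rough sketch of that approval gate, with everything hypothetical: the tool only proposes command lines, an allowlist plus a reviewer decides, and nothing is executed here at all. The names ALLOWED_PROGRAMS and review are made up, and the lambda stands in for a human pressing y/n.

```python
import shlex

# Hypothetical allowlist: the only programs a proposal may invoke at all.
ALLOWED_PROGRAMS = {"git", "ls"}


def review(proposed, approve):
    """Split proposed command lines into approved argv lists and rejected lines.

    `approve` takes the parsed argv list and returns True or False; in real
    use it would prompt a human rather than apply a rule.
    """
    approved, rejected = [], []
    for line in proposed:
        argv = shlex.split(line)
        if argv and argv[0] in ALLOWED_PROGRAMS and approve(argv):
            approved.append(argv)
        else:
            rejected.append(line)
    return approved, rejected


proposed = ["git status", "dropdb production", "git push --force"]
# Stand-in reviewer: refuse anything containing --force.
approved, rejected = review(proposed, lambda argv: "--force" not in argv)
print(approved)
print(rejected)
```

Approved argv lists would then be run without a shell, under an account that could not touch production even if something slipped through.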

When you go so hard you Hadamard

https://wandering.shop/@flub@mastodon.social/115813129402677936

Happy new year everybody. They want to ban fireworks here next year so people set fire to some parts of Dutch cities.

Unrelated to that, let 2026 be the year of the butlerian jihad.

Another video on Honey (“The Honey Files Expose Major Fraud!”) - https://www.youtube.com/watch?v=qCGT_CKGgFE

Shame he missed cyber monday by a couple weeks.

Also 16:35 haha ofc it’s just json full of regexes.

They avoid the classic mistake of forgetting to escape . in the URL regex. I’ve made that mistake before…

Like imagine you have a mission critical URL regex telling your code what websites to trust as https://www.trusted-website.net/.* but then someone comes along and registers the domain name https://wwwwtrusted-website.net/. I’m convinced that’s some sort of niche security vulnerability in some existing system but no one has run into it yet.

None of this comment is actually important. The URL regexes just gave me work flashbacks.
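For the record, the unescaped-dot problem is easy to reproduce. A small sketch using Python's re module and the two example URLs from the comment above; the /login path and the LOOSE/STRICT names are made up for the demo.

```python
import re

# Unescaped dots: each "." matches any single character, not just a literal dot.
LOOSE = re.compile(r"https://www.trusted-website.net/.*")
# Escaped dots: only a literal "." matches; the trailing ".*" stays a wildcard.
STRICT = re.compile(r"https://www\.trusted-website\.net/.*")

good = "https://www.trusted-website.net/login"
lookalike = "https://wwwwtrusted-website.net/login"

# The loose pattern trusts both URLs; the strict one trusts only the real host.
print(bool(LOOSE.fullmatch(good)), bool(LOOSE.fullmatch(lookalike)))
print(bool(STRICT.fullmatch(good)), bool(STRICT.fullmatch(lookalike)))
```

If the trusted host names come from data rather than being typed by hand, passing them through re.escape before building the pattern avoids the mistake entirely.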

a couple weeks back I had a many-rounds support ticket with a network vendor, querying exactly the details of their regex implementation. docs all said PCRE, actual usage attempt indicated….something very much else. and indeed it was because of . that I found it

A rival gang of “AI” “researchers” dare to make fun of Big Yud’s latest book and the LW crowd are Not Happy

Link to takedown: https://www.mechanize.work/blog/unfalsifiable-stories-of-doom/ (heartbreaking: the worst people you know made some good points)

When we say Y&S’s arguments are theological, we don’t just mean they sound religious. Nor are we using “theological” to simply mean “wrong”. For example, we would not call belief in a flat Earth theological. That’s because, although this belief is clearly false, it still stems from empirical observations (however misinterpreted).

What we mean is that Y&S’s methods resemble theology in both structure and approach. Their work is fundamentally untestable. They develop extensive theories about nonexistent, idealized, ultrapowerful beings. They support these theories with long chains of abstract reasoning rather than empirical observation. They rarely define their concepts precisely, opting to explain them through allegorical stories and metaphors whose meaning is ambiguous.

Their arguments, moreover, are employed in service of an eschatological conclusion. They present a stark binary choice: either we achieve alignment or face total extinction. In their view, there’s no room for partial solutions, or muddling through. The ordinary methods of dealing with technological safety, like continuous iteration and testing, are utterly unable to solve this challenge. There is a sharp line separating the “before” and “after”: once superintelligent AI is created, our doom will be decided.

LW announcement, check out the karma scores! https://www.lesswrong.com/posts/Bu3dhPxw6E8enRGMC/stephen-mcaleese-s-shortform?commentId=BkNBuHoLw5JXjftCP

A few comments…

We want to engage with these critics, but there is no standard argument to respond to, no single text that unifies the AI safety community.

Yeah, Eliezer had a solid decade and a half to develop a presence in academic literature. Nick Bostrom at least sort of tried to formalize some of the arguments but didn’t really succeed. I don’t think they could have succeeded, given how speculative their stuff is, but if they had, review papers could have tried to consolidate them and then people could actually respond to the arguments fully. (We all know how Eliezer loves to complain about people not responding to his full set of arguments.)

Apart from a few brief mentions of real-world examples of LLMs acting unstable, like the case of Sydney Bing, the online appendix contains what seems to be the closest thing Y&S present to an empirical argument for their central thesis.

But in fact, none of these lines of evidence support their theory. All of these behaviors are distinctly human, not alien.

Even granting that Anthropic’s “research” tends to be rigged scenarios acting as marketing hype without peer review or academic levels of quality, at the very least they (usually) involve actual AI systems that actually exist. It is pretty absurd the extent to which Eliezer has ignored everything about how LLMs actually work (or even hypothetically might work with major foundational developments) in favor of repeating the same scenario he came up with in the mid 2000s. Nor has he even tried mathematical analyses of what classes of problems are computationally tractable to a smart enough entity and which remain computationally intractable. (titotal has written some blog posts about this with materials science; tldr, even if magic nanotech was possible, an AGI would need lots of experimentation and can’t just figure it out with simulations. Or the lesswrong post explaining how chaos theory and slight imperfections in measurement make a game of pinball unpredictable past a few ricochets.)

The lesswrong responses are stubborn as always.

That’s because we aren’t in the superintelligent regime yet.

Y’all aren’t beating the theology allegations.

Yeah, Eliezer had a solid decade and a half to develop a presence in academic literature. Nick Bostrom at least sort of tried to formalize some of the arguments but didn’t really succeed.

(Guy in hot dog suit) “We’re all looking for the person who didn’t do this!”

I clicked through too much and ended up finding this. Congrats to jdp for getting onto my radar, I suppose. Are LLMs bad for humans? Maybe. Are LLMs secretly creating a (mind-)virus without telling humans? That’s a helluva question, you should share your drugs with me while we talk about it.