Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this.)

After finding out about her here, I’ve been watching a lot of Angela Collier videos lately. Here’s the most recent one, which talks about our life extending friends.

She also said she basically wants to focus less on the sort of ‘callout’ content which does well on yt and more on actual physics stuff. Which is great, and it’s also good that she realized how slippery a slope that sort of content is for your channel.

(I mentioned before how sad it is to see ‘angry gamer culture war’ channels be stuck in that sort of content, as when they do non-rage shit, nobody watches them. (I mean sad for them in an ‘if I was them’ way btw, don’t get me wrong, fuckem for choosing that path (and fuck the system for the fact that they are now financially stuck in that, and that it made this an available path anyway (while making it hard for lgbt people to make a channel about their experiences)), so many people hurt/radicalized for a few clicks and ad money))

she’s great

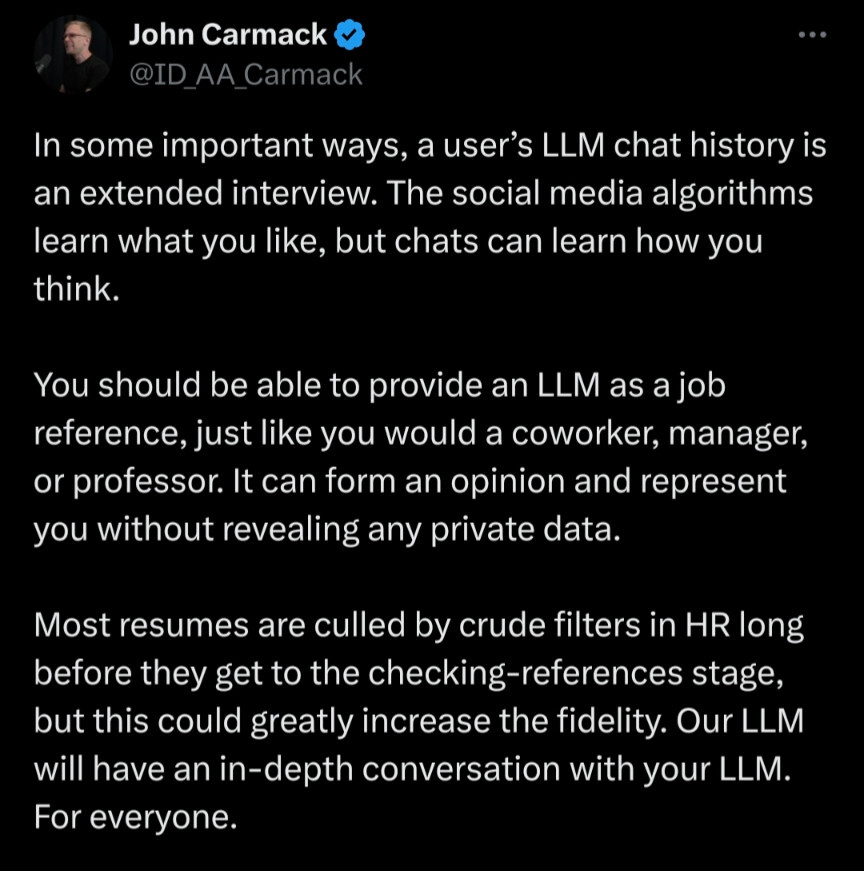

“you should be able to provide an LLM as a job reference”

source https://x.com/ID_AA_Carmack/status/1998753499002048589

Sure John, let me know when you’ve got that set up. Something that retains my entire search/chat history, caches the responses as well, and pulls all that into the context window when it’s time to generate a job referral. Maybe you’ll be able to shotgun something together out of remaindered hardware this time next year? I’ll be waiting.

I’m legitimately disappointed in John Carmack here. He should be a good enough programmer to understand the limitations here, but I guess his business career has driven him in a different direction.

Nah he has brainrot. He deadnamed and misgendered Rebecca Heinemann in his eulogy of her. Transphobia seems to really make people worse at thinking.

(Not a big shock considering how bad his answer was towards the ‘epic own’ of the person asking him if he would hire more women. (He said: “we are having a hard time hiring all the people that we want. It doesn’t matter what they look like.”, which sounds like a great own, but leaves one big question. Why aren’t you trying to educate more people in what you want then? What are you doing to fix that problem if it is such a big problem for you? (which then leads to, how will you ensure that this teaching system has women in it, etc)))

Carmack is a Gen-X Texan mostly known for using hardmode C to code an FPS. He’s so drenched in nerd testosterone I’d be surprised if he wasn’t background radiation level non-woke. Not saying he shouldn’t be as a human being, just that it would be an uphill battle for him.

At least we have Romero. He had an edgelord phase, but only when he was younger, and he realized he fucked up.

There’s something intensely satisfying about seeing this the same week id Software became a union shop.

What, that doesn’t even make sense. I don’t type what I’m thinking. (My only real usage of LLMs was trying to break them, so imagine the output of that. You could then imagine what you think I was trying to attempt in messing with the system, but then you would be doing the work. Say you see me send the same message twice. A logical conclusion would be that I tried to see if it gave different results if prompted the same way twice. However, it is more likely I just made a copy-paste error and accidentally sent the wrong text the second time. So the person reading the logs is doing a lot of work here.) Ignoring all that, he also didn’t think of the next case: people using an LLM to fake chat logs to optimize being hired. Good way to hire a lot of North Koreans.

This is offensively stupid lol

He’s become the Linus Pauling of video games.

definitely taking Vitamin L

Found a new and lengthy sneer on Bluesky, mocking the promptfondlers’ gullibility.

The Great Leader himself, on how he avoids going insane during the ongoing End of the World because among other things that’s not what an intelligent character would do in a story, but you might not be capable of that.

It’s very meta for Yud to write a story all about how the story isn’t all about himself.

I forgot to mention it last week, but this is Scott Adams shit. The stuff which made him declare that Trump would win in a landslide in 2016 due to movie rules. IIRC he also claimed he was right about that despite Trump not winning in a landslide, the sort of goalpost-moving bs he judges others harshly for (even though in those other situations it doesn’t apply).

So up next, Yud will claim some pro AI people want him dead and after that Yud will try to convince people he can bring people to orgasm by words alone. I mean those are the ‘adamslike’ genre tropes now.

Saying that at age 46 you are proud of not reenacting tropes from fantasy novels you read when you were 9 is something special. “He’s the greatest single mind since L. Ron Hubbard.”

His OkCupid profile also showed a weak grasp on the difference between fantasy and reality.

Do we know when he transitioned from Margaret Weis and Lawrence Watt-Evans to filthy Japanese cartoons?

Also discussed in last week’s Stubsack.

Screaming at the void towards Chuunibyou (wiki) Eliezer: YOU ARE NOT A NOVEL CHARACTER, THINKING OF WHAT BENEFITS THE NOVELIST vs THE CHARACTER HAS NO BEARING ON REAL LIFE.

Sorry for yelling.

Minor notes:

But <Employee> thinks I should say it, so I will say it. […] <Employee> asked me to speak them anyways, so I will.

It’s quite petty of Yud to be so passive-aggressive towards the employee who insisted he at least try to discuss coping, name-dropping him not once but twice (although that could also just be poor editing).

“How are you coping with the end of the world?” […Blah…Blah…Spiel about going mad tropes…]

Yud, when journalists ask you “How are you coping?”, they don’t expect you to be “going mad facing apocalypse”; that is YOUR poor imagination as a writer/empathetic person. They expect you to be answering how you are managing your emotions and your stress, or barring that, to give a message of hope or of some desperation. They are trying to engage with you as a real human being, not as a novel character.

Alternatively it’s also a question to gauge how full of shit you may be. (By gauging how emotionally invested you are)

The trope of somebody going insane as the world ends, does not appeal to me as an author, including in my role as the author of my own life. It seems obvious, cliche, predictable, and contrary to the ideals of writing intelligent characters. Nothing about it seems fresh or interesting. It doesn’t tempt me to write, and it doesn’t tempt me to be.

Emotional turmoil and how characters cope, or fail to cope, makes excellent literature! That all you can think of is “going mad” reflects only your poor imagination as both a writer and a reader.

I predict, because to them I am the subject of the story and it has not occurred to them that there’s a whole planet out there too to be the story-subject.

This is only true if they actually accept the premise of what you are trying to sell them.

[…] I was rolling my eyes about how they’d now found a new way of being the story’s subject.

That is deeply ironic, coming from someone who makes choices based on being the main character of a novel.

Besides being a thing I can just decide, my decision to stay sane is also something that I implement by not writing an expectation of future insanity into my internal script / pseudo-predictive sort-of-world-model that instead connects to motor output.

If you are truly doing this, I would say that means you are expecting insanity wayyyyy too much. (also psychobabble)

[…Too painful to actually quote psychobabble about getting out of bed in the morning…]

In which Yud goes into in-depth, self-aggrandizing, nonsensical detail about a very mundane trick for getting out of bed in the morning.

They expect you to be answering how you are managing your emotions and your stress, or barring that, to give a message of hope or of some desperation. They are trying to engage with you as a real human being, not as a novel character.

does EY fail to get that the interview isn’t for him, but for the audience? if he wants to sway anyone, then he’d need to adjust what he talks about and how, otherwise it just turns into a circlejerk

Yud, when journalists ask you “How are you coping?”, they don’t expect you to be “going mad facing apocalypse”; that is YOUR poor imagination as a writer/empathetic person. They expect you to be answering how you are managing your emotions and your stress, or barring that, to give a message of hope or of some desperation. They are trying to engage with you as a real human being, not as a novel character.

I think the way he reads the question is telling on himself. He knows he is sort of doing a half-assed response to the impending apocalypse (going on a podcast tour, making even lower-quality lesswrong posts, making unworkable policy proposals, and continuing to follow the lib-centrist deep down inside himself and rejecting violence or even direct action against the AI companies that are hurling us towards an apocalypse). He knows a character from one of his stories would have a much cooler response, but it might end up getting him labeled a terrorist and sent to prison or whatever, so instead he rationalizes his current set of actions. This is in fact insane by rationalist standards, so when a journalist asks him a harmless question it sends him down a long trail of rationalizations that include failing to empathize with the journalist and understand the question.

The trope of somebody going insane as the world ends, does not appeal to me as an author, including in my role as the author of my own life. It seems obvious, cliche, predictable, and contrary to the ideals of writing intelligent characters. Nothing about it seems fresh or interesting. It doesn’t tempt me to write, and it doesn’t tempt me to be.

When I read HPMOR (years ago, before I knew who tf Yud was, when I thought Harry was intentionally written as a deeply flawed character and not a fucking self-insert), my favourite part was Hermione’s death. Harry then goes into grief that he is unable to cope with, dissociating to such an insane degree that he stops viewing most other people as thinking and acting individuals. He quite literally goes insane as his world - his friend and his illusion of being the smartest and always in control of the situation - ends.

Of course now in hindsight I know this is just me inventing a much better character and story, and Yud is full of shit, but I find it funny that he inadvertently wrote a character behave insanely and probably thought he’s actually a turborational guy completely in control of his own feelings.

I feel like this is a really common experience with both HPMoR and HP itself, and explains a large part of the positive reputation they enjoy(ed).

Yud seems to have the same conception of insanity that Lovecraft did, where you learn too much and end up gibbering in a heap on the floor and needing to be fed through a tube in an asylum or whatever. Even beyond the absurdity of pretending that your authorial intent has some kind of ability to manifest reality as long as you don’t let yourself be the subject (this is what no postmodernism does to a person), the actual fear of “going mad” seems fundamentally disconnected from any real sense of failing to handle the stress of being famously certain that the end times are indeed upon us. I guess prophets of doom aren’t really known for being stable or immune to narcissistic flights of fancy.

the actual fear of “going mad” seems fundamentally disconnected from any real sense of failing to handle the stress of being famously certain that the end times are indeed upon us

I think he actually is failing to handle the stress he has inflicted on himself, and that’s why his latest few lesswrong posts had really stilted, poor parables about chess and about alien robots visiting earth that were much worse than the classic sequences parables. And why he has basically given up trying to think of anything new and instead keeps playing the greatest lesswrong hits on repeat, as if that would convince anyone who isn’t already convinced.

Having a SAN stat act like an INT (IQ) stat is very on brand for rationalists (except ofc the INT stat is immutable duh)

I learned too much and started having (more) trouble getting out of bed in the morning, does that count?

Hitting the konbini in the wee hours for strong zeros, lolicon mags and pawahara ice cream

stay with meeeeeeeee~~~🎶

~~In the dead of night, knocking on the door~~

“How do you keep yourself from going insane?”

“I tell myself I’m a character from a book who comes to life and is also a robot!” (Hubert Farnsworth giggle)

“I like to dissociate completely! Wait, what was the question?”

The first and oldest reason I stay sane is that I am an author, and above tropes.

Nobody is above tropes. Tropes are just patterns you see in narratives. Everything you can describe is a trope. To say you are above tropes means you don’t live and exist.

Going mad in the face of the oncoming end of the world is a trope.

Not going mad as the world ends is also a trope, you fuck!

This sense – which I might call, genre-savviness about the genre of real life – is historically where I began; it is where I began, somewhere around age nine, to choose not to become the boringly obvious dramatic version of Eliezer Yudkowsky that a cliche author would instantly pattern-complete about a literary character facing my experiences.

We now have a canon mental age for Yud of drumroll nine.

Just decide to be sane

That isn’t how it works, idiot. You can’t “decide to be sane”, that’s like having a private language.

Anyway, just to make the subtext of my other comments into text. Acting like you are a character in a story is a dissociative delusion and counter to reality. It is definitively not sane. Insane, if you will.

Just decide to be sane, sanity is a skill issue

Yud has officially cured mental health forever, psychologists and therapists in shambles

Joke’s on him, Know-Nothing Know-It-All is also a trope.

they really thonk that people work just like chatbots, don’t they

Eliezer’s latest book says humans and chatbots are both “sentence-producing machines” so yes

It’s the most obvious explanation for their behaviour I can think of.

To say you are above tropes means you don’t live and exist.

To say you are above tropes is actually a trope

Followup:

Look, the world is fucked. All kinds of paradigms we’ve been taught have been broken left and right. The world has ended many times over in this regard. In place of anything interesting or helpful to address this, Yud’s encoded a giant turd into a blog post. How to stay sane? Just stay sane, bro. Easy to say if the only thing threatening your worldview is a made-up robodemon that will never exist.

Here’s Yud’s actually-quite-easy-to-understand suggestions:

- detach from reality by pretending you are a character in a story as a coping mechanism.

- assume no personal responsibility or agency.

- don’t go insane, i.e. make sure you try and fulfil society’s expectations of what sanity is.

All of these are terrible. In general, you want to stay grounded in reality, be aware of the agency you have in the world, and don’t feel pressured to performatively participate in society, especially if that means doing arbitrary rituals to prove that you are “sane”.

Here are my thoughts on “how to stay sane” and “how to cope”:

It’s entirely reasonable to crash out. I don’t want anyone to go insane, but fucking look at all this shit. Datacenters are boiling the oceans. Liberalism is starting its endgame into fascism. All the fucking genocides! Dissociating is acceptable and expected as an emotional response. All of this has been happening in (modern) human history to a degree where crashing out has been reasonable. Yet, many people have been able to “stay sane” in the face of this. If you see someone who appears to be sane, either they’re fucked in the head, or they have some perspective or have built up some level of resilience. Whether or not those things can be helpful to someone else is not deterministic. If you are someone who has “stayed sane”, please remember to show some empathy and some awareness that it’s fine if someone is miserable, because again, everything is fucked.

Putting the above together, I accept basically any reaction to the state of the world. It’s reasonable to go either way, and you shouldn’t feel bad either way. “Sanity” has different meanings depending on where you look. I think there’s a common, unspoken definition that basically boils down to “a sane person is someone who can productively participate in society.” This is not a standard you always need to hold yourself to. I think it’s helpful to introspect and, uh, “extrospect”, here. Like, figure out what you think it means to be sane, what you want it to mean, and what you want. And bounce these ideas off of someone else, because that usually helps.

I think there is another common definition of sanity that might just be “mentally healthy”. To that end, things that have helped me, aside from therapy, that aren’t particularly insightful or unique:

- Talking to friends

- Finding places to talk about the world going to shit.

- Participating in community, online or irl.

- Basically just finding spaces where stupid shit gets dunked on.

- Leftist meme pages

I mean, is that so fucking hard to say?

One part in particular pissed me off for being blatantly the opposite of reality

and remembering that it’s not about me.

And so similarly I did not make a great show of regret about having spent my teenage years trying to accelerate the development of self-improving AI.

Eliezer literally has multiple sequences about his foolish youth where he nearly destroyed the world trying to jump straight to inventing AI instead of figuring out “AI Friendliness” first!

I did not neglect to conduct a review of what I did wrong and update my policies; you know some of those updates as the Sequences.

Nah, you learned nothing from what you did wrong and your sequence posts were the very sort of self aggrandizing bullshit you’re mocking here.

Should I promote it to the center of my narrative in order to make the whole thing be about my dramatic regretful feelings? Nah. I had AGI concerns to work on instead.

Eliezer’s “AGI concerns to work on” was making a plan for him, personally, to lead a small team, which would solve meta-ethics and figure out how to implement these meta-ethics in a perfectly reliable way in an AI that didn’t exist yet (that a theoretical approach didn’t exist for yet, that an inkling of how to make traction on a theoretical approach for didn’t exist yet). The very plan Eliezer came up with was self aggrandizing bullshit that made everything about Eliezer.

tl;dr i don’t actually believe the world is going to end but more importantly i’m Ender Wiggin

❌: Ender Wiggin

✅: End Wiggin’

Disney invests $1B in OpenAI, allowing access to all Disney characters

https://thewaltdisneycompany.com/disney-openai-sora-agreement/

Of course, Disney loves its cease-and-desists, such as the one to Character.AI in October and one to Google today: https://variety.com/2025/digital/news/disney-google-ai-copyright-infringement-cease-and-desist-letter-1236606429/

Is this actually because of brand protection or just shareholder value? Racist, sexist, and all around abhorrent content is now easily generated with your favorite Disney owned characters just as long as you do it on the approved platform.

So the code red is over now, I guess?!?!

(who ordered the code red.gif)

Remember the flood of offensive Pixaresque slop that happened in 2023? We’re gonna see something similar thanks to this deal for sure.

Somebody on bsky mentioned this is prob because Disney wants to be seen on the stock market as a tech company, and not a cartoon/theme park company.

(See how Tesla went from cars to self driving to now robots)

Patrick Boyle on YouTube has a breakdown of the breakdown of the Microstrategy flywheel scheme. Decent financial analysis of this nonsense combined with some of the driest humor on the internet.

can wallstreet cook a potato so hot that even they wouldn’t be able to hold it?

got jumpscared by a bubble mention in the least likely place i’d have expected, a weekly defense economics powerpoint:

I got jumpscared by Gavin D. Howard today; apparently his version of `bc` appeared on my system somehow, and his name’s in the copyright notice. Who is Gavin anyway? Well, he used to have a blog post that straight-up admitted his fascism, but I can’t find it. I could only find, say, the following five articles, presented chronologically:

- Free Speech and Pronouns

- Israel is Not an Apartheid State, featuring Dennis “No U” Prager

- My Thought Process Regarding Vaccines

- Intermission: This comment on Lobsters leads to this ban reason on Lobsters

- No More Skittles, featuring Libs of “TikTok” TikTok

- I Am Divorced

Also, while he’s apparently not caused issues for NixOS maintainers yet, he’s written An Apology to the Gentoo Authors for not following their rules when it comes to that same `bc` package. So this might be worth removing for reasons other than the Christofascist authorship.

BTW, his code shows up because it’s in upstream BusyBox and I have a BusyBox on my system for emergency purposes. I suppose it’s time to look at whether there is a better BusyBox out there. Also, it looks like Denys Vlasenko has made over one hundred edits to this code to integrate it with BusyBox, fix correctness and safety bugs, and improve performance; Gavin only made the initial commit.

Pretty sure this guy has some serious mental health issues.

OpenAI Declares ‘Code Red’ as Google Threatens AI Lead

I just wanted to point out this tidbit:

Altman said OpenAI would be pushing back work on other initiatives, such as advertising, AI agents for health and shopping, and a personal assistant called Pulse.

Apparently a fortunate side effect of Google supposedly closing the gap is that it’s a great opportunity to give up on agents without looking like complete clowns. And also to make Pulse even more vapory.

Is Pulse the Jony Ive device thing? I half suspected that would never come to market anyway.

Some rando mathematician checks out some IQ-related twin studies and finds out (gasp) that Cremieux is a manipulative liar with an agenda

https://davidbessis.substack.com/p/twins-reared-apart-do-not-exist

Said author is sad that Paul Graham retweets the claim, but does not draw the obvious conclusion that PG is just a bog-standard Silicon Valley VC pseudo-racist.

HN is not happy and a green username calling themselves “jagoff” leads the charge

Honestly I’m kinda grateful for people who dig into and analyze the actual data in seeming ignorance of the political context of the people pushing the other side. It’s one thing to know that Jordan “Crimieux” Lasker and friends are out here doing Deutsch Genetik and another to have someone cleanly and clearly lay out exactly why they’re wrong, especially when they do the work of assembling a more realistic and useful story about the same studies.

Very good read actually.

Except, from the epilogue:

People are working to resolve [intelligence heritability issue] with new techniques and meta-arguments. As far as I understand, the frontline seems to be stabilizing around the 30-50% range. Sasha Gusev argues for the lower end of that band, but not everyone agrees.

The not-everyone-agrees link is to acx and siskind’s take on the matter, who unfortunately seems to continue to fly under the radar as a disingenuous eugenicist shitweasel with a long-term project of using his platform to sane-wash gutter racists who pretend at doing science.

Yeah, the substacker seems either naive or genuinely misinformed about Siskind’s ultimate agenda, but in their defense Scott is really really good at vomiting forth torrents of beige prose that obscure it.

Here’s the bottom line: I have no idea what motivated Cremieux to include Burt’s fraudulent data, but even without it his visual is highly misleading, if not manipulative

Well, the latter part of this sentence gives a hint at the actual reason.

And the first comment is by Hanania lol, trying to debunk the fraud allegations by saying that is just how things were done back then, while also not realizing he didn’t understand the first part of the article. Amazing how these IQ anon guys always react quickly and to everything. This was also quite an issue on Reddit, where even a small dismissal of IQ could lead to huge (copy-pasted) rambling defenses of IQ.

The author is also calling Richard out on his weird framing.

It’s fascinating to watch Hanania try and do politics in a comment space more focused on academic inquiry, and how silly he looks here. He can’t participate in this conversation without trying to make it about social interventions and class warfare (against the poor), even though I don’t know that Bessis would disagree that social interventions can’t significantly increase the number of mathematical or scientific geniuses in a country (1). Instead, Hanania throws out a few brief, unsupported arguments, gets asked for clarification and validation, accuses everyone of being woke, and gets basically ignored as the conversation continues around him.

This feels like the kind of environment that Siskind and friends claim to be wanting to create, but it feels like they’re constitutionally incapable of actually doing the “ignore Nazis until they go away” part.

(1) From his other post linked in the thread, he credits that level of aptitude to idiosyncratic ways of thinking that are neither genetically nor socially determined, but can be cultivated actively through various means. The reason that the average poor Indian boy doesn’t become Ramanujan is the same reason you or I or his own hypothetical twin brother didn’t: we’re not Ramanujan. This doesn’t mean that we can’t significantly improve our own ability to understand and use mathematical thinking.

It might have already been posted here, but this Wikipedia guide to recognizing AI slop is such a good resource.

Although I never use LLMs for any serious purpose, I do sometimes give LLMs test questions in order to get firsthand experience on what their responses are like. This guide tracks quite well with what I see. The language is flowery and full of unnecessary metaphors, and the formatting has excessive bullet points, boldface, and emoji. (Seeing emoji in what is supposed to be a serious text really pisses me off for some reason.) When I read the text carefully, I can almost always find mistakes or severe omissions, even when the mistake could easily be remedied by searching the internet.

This is perfectly in line with the fact that LLMs do not have deep understanding, or the understanding is only in the mind of the user, such as with rubber duck debugging. I agree with the “Barnum-effect” comment (see this essay for what that refers to).

A fairly good and nuanced guide. No magic silver-bullet shibboleths for us.

I particularly like this section:

Consequently, the LLM tends to omit specific, unusual, nuanced facts (which are statistically rare) and replace them with more generic, positive descriptions (which are statistically common). Thus the highly specific “inventor of the first train-coupling device” might become “a revolutionary titan of industry.” It is like shouting louder and louder that a portrait shows a uniquely important person, while the portrait itself is fading from a sharp photograph into a blurry, generic sketch. The subject becomes simultaneously less specific and more exaggerated.

I think it’s an excellent summary, and it connects with the “Barnum-effect” of LLMs, making them appear smarter than they are. And it’s not the presence of certain words, but the absence of certain others (and, well, content) that is a good indicator of LLM-extruded garbage.

Also, you can one-step explain from this guide why people with working bullshit detectors tend to immediately clock LLM output, while the executive class, whose whole existence is predicated on not discerning bullshit, are its greatest fans. A lot of us have seen A Guy In A Suit do this: intentionally avoid specifics to make himself/his company/his product look superficially better. Hell, the AI hype itself (and the blockchain and metaverse nonsense before it) relies heavily on this - never say specifics, always say “revolutionary technology, future, here to stay”, quickly run away if anyone tries to ask a question.

My feeling has become that I prefer the business executive kind of empty to the LLM kind; at least the former usually expresses some personality. It’s never entirely empty.

Doing a quick search, it hasn’t been posted here until now - thanks for dropping it.

In a similar vein, there’s a guide to recognising AI-extruded music on Newgrounds, written by two of the site’s Audio Moderators. This has been posted here before, but having every “slop tell guide” in one place is more convenient.

“This has been posted here before, but having every “slop tell guide” in one place is more convenient.”

Man, this is why human labour still reigns supreme. It’s such a small thing to consider the context in which these resources would be useful and to group together related resources as you have done here, but actions like this are how we can genuinely construct new meaning in the world. Even if we could completely eradicate hallucinations and nonspecific waffle in LLM output, they would still be woefully inept at this kind of task — they’re not good at making new stuff, for obvious reasons.

TL;DR: I appreciate you grouping these resources together for convenience. It’s the kind of mindful action that makes me think usefully about community building and positive online discourse.

It’s also the sort of thing that you wouldn’t actually think to ask for until it became quite hard to sort out. Creating this kind of list over time as good resources are found is much more practical, and not the kind of thing that would likely be automated.

Exactly! It’s basically a form of social informational infrastructure building

the fifth episode of odium symposium, “4chan: the french connection” is now up. the first roughly half of the episode is a dive into sartre’s theory of antisemitism. then we apply his theory to the style guide of a nazi news site and the life of its founder, andrew anglin

favorite one so far! It’s like graduate-level 1-900 Hotdog

Apparently you can ask gpt-5.2 to make you a zip of /home/oai and it will just do it:

https://old.reddit.com/r/OpenAI/comments/1pmb5n0/i_dug_deeper_into_the_openai_file_dump_its_not/

An important takeaway, I think, is that instead of Actually Indian it’s more like Actually a series of rushed scriptjobs - they seem to be trying hard to not let the llm do technical work itself.

Also, it seems their sandboxing amounts to filtering paths that start with /.

They (or the LLM that summarized their findings and may have hallucinated part of the post) say:

It is a fascinating example of “Glue Code” engineering, but it debunks the idea that the LLM is natively “understanding” or manipulating files. It’s just pushing buttons on a very complex, very human-made machine.

Literally nothing that they show here is bad software engineering. It sounds like they expected that the LLM’s internals would be 100% token-driven inference-oriented programming, or perhaps a mix of that and vibe code, and they are disappointed that it’s merely a standard Silicon Valley cloudy product.

My analysis is that Bobby and Vicky should get raises; they aren’t paid enough for this bullshit.

By the way, the post probably isn’t faked. Google-internal `go/` URLs do leak out sometimes, usually in comments. Searching GitHub for that specific URL turns up one hit in a repository which claims to hold a partial dump of the OpenAI agents. Here is `combined_apply_patch_cli.py`. The agent includes a copy of ImageMagick; truly, ImageMagick is our ecosystem’s cockroach.

OpenAI’s yearly payroll runs in the billions, so they probably aren’t hurting.

That Almost AGI is short for Actually Bob and Vicky seems like quite the embarrassment, however.

regarding my take in the previous stubsack, it does seem like crusoe intends to use these gas turbines as backup. as of 31.07.2025 they had five turbines installed (who knows if connected), with an obvious place for five more and some pieces of them (smokestacks mostly) in place. it does make sense that as of the october announcement, they had the first tranche of 10 installed or at least delivered. there’s no obvious prepared place where they intend to put the next 19 of them, and that’s just stuff from GE, with 21 more coming from proenergy (maybe it’s for a different site?). that said, it’s texas with famously reliable ercot, which means that on top of using these for shortages, they might be paying market rates for electricity, so even with power available, they might turn the turbines on when electricity gets ridiculously expensive. i’m sure that dispatchers will love some random fuckass telling them “hey, we’re disconnecting 250MW of load in 15 minutes” when the grid is already unstable due to being overloaded

linux tech issue:

My desktop computer started with just windows on it. No issues. A while back I dual-booted linux mint and windows, and pretty frequently when rebooting I’d have to deal with the computer insisting that I run fsck. I ended up switching back to just windows and thereafter had no issues.

This week I installed ubuntu as my sole OS and it’s not going very well. I’ve had to reinstall the OS a few times already. After the install at first everything is normal, but slowly I start getting hints of things going wrong. Certain programs will just not start, that kind of thing. Eventually I restart the computer and it will present me with a demand I run fsck. I do so, and now tons of system files are gone, the install is effectively dead, and I’m back to square zero.

I don’t understand what’s going on. I used the same media to install ubuntu on my laptop, and that works perfectly. I’ve had a version of this problem across two distros. And for some reason it doesn’t occur with windows! Complete mystery. Anyone have ideas?